I don’t remember exactly when my craze for wallpapers, screen savers, posters and abstract designs started. I remember how, during the late ’90s, I loved to look at the Windows 98 wallpapers, especially the ones with an image of clouds. Whenever the machine started and the clouds appeared on the screen, I got excited. Before playing games on my computer, I used to sit and watch the wallpaper for some time. I also loved changing wallpapers and screen savers from time to time. One screen saver that particularly excited me was the 3D Pipes screen saver. I remain awestruck by the default screen savers and wallpapers on all the devices I have used till now. I just never get bored. I used to dream of how great it would be to have those designs on my clothes and wearables.

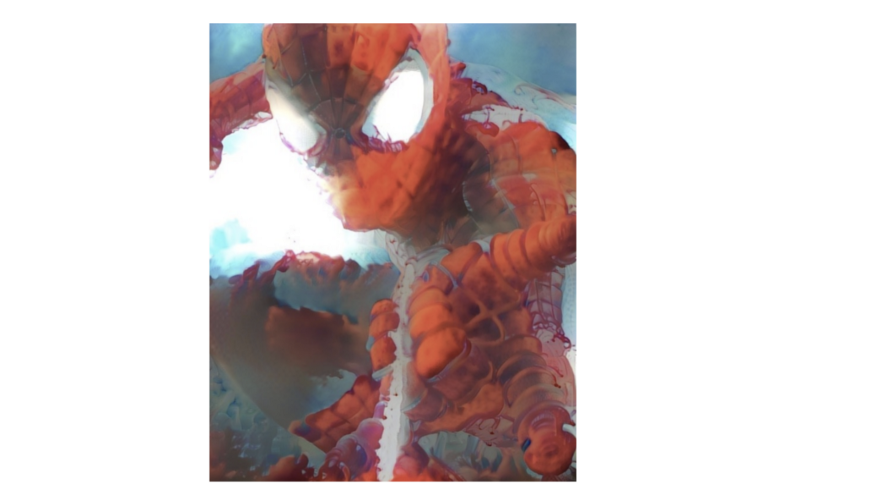

When I was introduced to comics and movies, what excited me the most were the comic covers, movie posters, concept art and backgrounds, rather than the stories. Movies like Tron and Star Wars, and the cyberpunk genre, were my particular favourites. I used to think it would be fascinating if these cover designs could be integrated into things that I used in my daily life. The abstract blows, bursting bombs, fire explosions, and the patterns and art created out of them always kept me excited; I watched them over and over again and also collected them. I have the habit of buying magazines, not just for reading, but more importantly for the covers.

This thought has always been at the back of my mind. Then came the idea: what if I could collect all those images and feed them to a machine that would generate personalised designs for me? With all the advancements in technology and printing, why couldn’t this be possible?

I am neither an artist nor a designer, nor am I an AI programmer. With some high-level technical knowledge and a lot of inspiration, I started exploring Artificial Intelligence to take my idea to a prototype model.

While exploring, I found the website https://deepart.io/, where style transfer happens when images and paintings are given as inputs. I became excited when I saw this deep learning technology, which was in the direction of my thoughts.

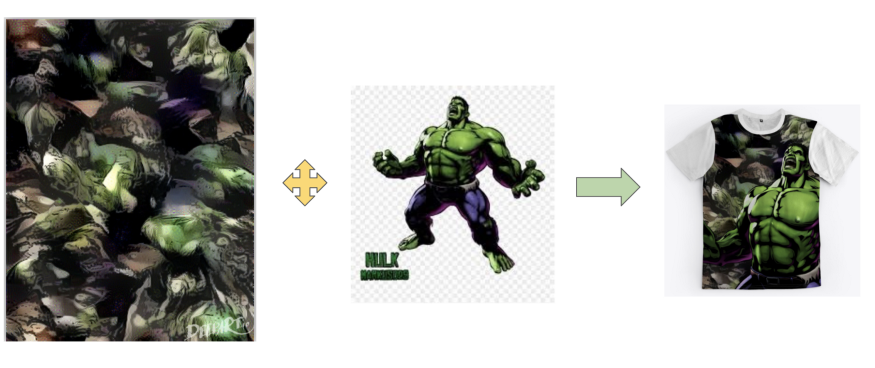

The outcomes generated by mixing and matching different images and artworks were interesting. Certain output images even stood out when made into apparel.

While exploring deep learning, I got to know about a neural network concept called the Generative Adversarial Network (GAN). I put on a data scientist hat because I wanted to know what was happening in depth. As I kept digging deeper into deep learning, I ended up in mathematics, especially statistics, which has been an absolute nightmare for me since my childhood.

Then I found there were ways to do this by simply using GAN programs written in Python that were already available on GitHub. But every research paper I had read and every video I had seen was mostly about style transfer between images, for example horses to zebras or apples to oranges. I had hit a dead end again.

I took a break from that perspective and moved to art and design, wearing my artist hat. I applied AI-generated images on white T-shirts. The results were amazing. I found myself in a dilemma: whether to directly create an AI T-shirt, or just an AI-generated image that could be applied on a plain T-shirt using UI techniques. With the help of my Wowgic friend, we created a simple application for the latter: https://wowgic.com

Next, I put on my AI programmer hat when I came across a GitHub implementation, https://github.com/micah5/sneaker-generator, where the developer had used images related to song lyrics to create designs for sneakers. I was happy to play around with this concept, so I downloaded the code. Instead of shoe images, I gave it images of patterns that I liked and trained the model for long periods, at times even overnight. When I woke up the next morning, I was eager to see the designs generated. I had hoped that AI would automatically come up with new aesthetic designs. But all I found were weird images without any pattern. They were in no way related to the images that I had used for training the model. They were just pixelated. I repeated the training with a different set of images, without tweaking the code even a bit. At this point, I was completely unaware of how GANs work.

I wondered how to introduce the aesthetic aspect to AI. I then convinced myself that art is subjective: a design liked by one person may not be liked by another. The priority was to get high-quality images (without pixelation) of a personalised design. I took further steps to understand GANs more deeply. That is when I realised that a GAN is essentially an imitation algorithm: a generator tries to produce images that could pass for the originals, while a discriminator tries to tell the real images from the generated ones, and the two keep battling each other. So the training images were a very important factor. I went back to the GitHub sneaker implementation and looked at the set of images used for training. They were all of the same size, with a white background. The shoes were of different colours and designs, but they were placed in exactly the same position (the middle) in every image.
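To make that observation concrete, here is a minimal sketch of the arithmetic for bringing any image to the same size and the same centred position on a square white canvas. It is only my illustration, not code from the sneaker repository: the function name `fit_on_canvas` and the 128-pixel canvas are assumptions, and the actual resizing and pasting would be done by an image library of your choice.

```python
def fit_on_canvas(width, height, canvas=128):
    """Work out how to place an image on a square canvas.

    Returns (new_width, new_height, left, top): the size the image
    should be scaled to (keeping its proportions) and the top-left
    offset that centres it on the canvas.
    The name and the default canvas size are hypothetical.
    """
    if width <= 0 or height <= 0:
        raise ValueError("image dimensions must be positive")

    # Scale so that the longer side exactly fills the canvas.
    scale = canvas / max(width, height)
    new_w = max(1, round(width * scale))
    new_h = max(1, round(height * scale))

    # Split the leftover space evenly so the subject sits in the middle.
    left = (canvas - new_w) // 2
    top = (canvas - new_h) // 2
    return new_w, new_h, left, top


if __name__ == "__main__":
    # A wide 400x200 image is scaled down and centred vertically.
    print(fit_on_canvas(400, 200))
```

With every training image passed through a step like this, the model would at least see pictures of one size with the subject in one place, which is what the sneaker dataset had and my pattern images did not.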

After learning that data acquisition is the key, I tried scraping images from Google for my personalised search criteria using the GitHub code at https://github.com/debadridtt/Scraping-Google-Images-using-Python. Only around 40% of the images were to my liking (aesthetically). Even among the images that I liked, most were not appropriate for training the model because of their shape, size and pixel attributes. So my search for the proper dataset continued.
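A rough sketch of how scraped images could be screened by shape and size before training is shown below. The thresholds (a minimum side of 128 pixels and a maximum aspect ratio of 1.5) and the names `is_usable` and `filter_images` are assumptions of mine for illustration, not values from any of the repositories mentioned here. The image dimensions are assumed to have been read beforehand with whatever image library is at hand.

```python
def is_usable(width, height, min_side=128, max_aspect=1.5):
    """Return True if an image is large enough and close enough to square.

    min_side and max_aspect are hypothetical thresholds; tune them
    to the model being trained.
    """
    if width <= 0 or height <= 0:
        return False
    # Too small: upscaling would only add pixelation.
    if min(width, height) < min_side:
        return False
    # Too elongated: fitting it on a square canvas wastes most of the area.
    aspect = max(width, height) / min(width, height)
    return aspect <= max_aspect


def filter_images(sizes, min_side=128, max_aspect=1.5):
    """Keep the names of images whose (width, height) pass is_usable."""
    return [
        name
        for name, (width, height) in sizes.items()
        if is_usable(width, height, min_side, max_aspect)
    ]


if __name__ == "__main__":
    scraped = {
        "pattern_a.jpg": (512, 512),   # fine
        "thumb_b.jpg": (64, 64),       # too small
        "banner_c.jpg": (1200, 300),   # too wide
        "poster_d.jpg": (300, 400),    # fine
    }
    print(filter_images(scraped))
```

A filter like this only handles shape and size. Whether an image is aesthetically to my liking still has to be judged by eye.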

While I was searching for datasets, one of my friends shared the website https://thistshirtcompanydoesnotexist.com/, where an AI startup sold T-shirts with designs created using the Google doodle dataset. This gave me the confidence to try using that Google doodle dataset myself to create quirky designs.

Finally, even though the output I could generate looked better, I still could not achieve designs that I would like to wear. Here I realised that an AI model for personalising a design involves huge complexity, a large amount of high-quality data, deeper understanding, sophisticated hardware and design effort. So I have started to learn Photoshop and create my own designs to build the appropriate dataset. I am still pushing my limits to achieve an aesthetically pleasing design created by AI, for which I have reached out to a few of my friends. The brainstorming sessions with them have been very helpful in my research and in taking it forward. I am enjoying this process of trying to bring creativity and technology as close together as possible, though at times they seem to drift further apart. The journey continues, as the cooking of the meat has started.

Thanks to my wife for helping me with my blog. I hope that the journey gets even more interesting for me to write my second blog.